Defect #12680

open

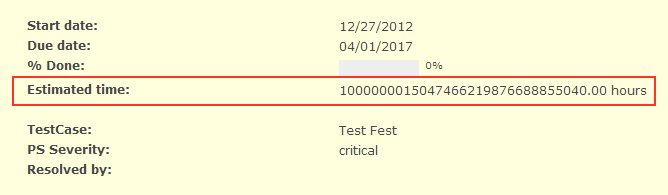

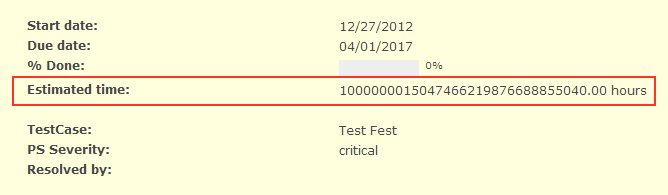

Estimated Time value is corrupted

Added by Konstantin K. over 12 years ago.

Updated about 12 years ago.

Description

Estimated Time value is corrupted.

Steps to reproduce:

1. Open an issue.

2. Set Estimated Time to '999999999999999999999999999999'.

Expected results:

Estimated Time is equal to '999999999999999999999999999999'

Actual results:

Version used:

2.1.4.stable.10927

Files

- Status changed from New to Confirmed

Yes, this happens when we exceed the floating-point precision:

```
irb(main):001:0> "%02f" % 999999999999999999999999999999.0
=> "1000000000000000019884624838656.000000"
```
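The change in digits comes from the size of the gap between neighbouring doubles at this magnitude. The sketch below is plain Ruby and does not involve any Redmine code. A Float keeps 53 significant bits, which is roughly 15 to 17 decimal digits. Near 10^30, consecutive representable values are therefore 2^47 apart, and a 30-digit input is rounded to the nearest of them.

```ruby
# The value from the report, parsed as a double-precision Float.
estimate = 999999999999999999999999999999.0

# Number of significant bits in a Ruby Float (53 for IEEE 754 doubles).
puts Float::MANT_DIG

# Distance from this value to the next representable Float.
gap = estimate.next_float - estimate
puts gap.to_i # 140737488355328, i.e. 2**47

# The exact integer the Float actually stores.
puts estimate.to_i # 1000000000000000019884624838656
```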

One solution would be to validate the estimated time against a reasonable range of values (e.g. 0 to 1,000,000). What do you think?
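Below is a rough sketch of such a range check. It is plain Ruby and does not reproduce Redmine's actual validation code. The method name `valid_estimated_hours?` and the constants `MIN_ESTIMATED_HOURS` / `MAX_ESTIMATED_HOURS` are hypothetical, and the bounds follow the example range suggested in the comment.

```ruby
# Hypothetical bounds, following the example range of 0 to 1,000,000.
MIN_ESTIMATED_HOURS = 0
MAX_ESTIMATED_HOURS = 1_000_000

# Hypothetical helper: true when the raw form input parses as a finite number
# inside the allowed range. In a Rails model this would more typically be a
# `validates ..., numericality: { ... }` declaration.
def valid_estimated_hours?(raw)
  value = Float(raw, exception: false)
  return false if value.nil? || !value.finite?

  value.between?(MIN_ESTIMATED_HOURS, MAX_ESTIMATED_HOURS)
end

puts valid_estimated_hours?('8.5')                            # true
puts valid_estimated_hours?('999999999999999999999999999999') # false
puts valid_estimated_hours?('abc')                            # false
```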

Hi Jean-Philippe,

I proposed another solution in #12955.

What do you think about changing the datatype from float to decimal, which is much more accurate? We use decimal throughout our analytics because it behaves better in conversions, handles very large numbers well without falling back to exponential notation, and is supported by all databases.
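The following plain-Ruby sketch uses the standard `bigdecimal` library to show how the two types differ on the value from the report. In Rails, decimal columns are read back as `BigDecimal`. The variable names are illustrative only.

```ruby
require 'bigdecimal'

input = '999999999999999999999999999999'

# Float: rounded to the nearest double, as in the irb example above.
as_float = Float(input)

# BigDecimal: keeps every decimal digit exactly.
as_decimal = BigDecimal(input)

puts as_float.to_i        # 1000000000000000019884624838656
puts as_decimal.to_s('F') # 999999999999999999999999999999.0
```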

This would solve the problem without limiting the estimated time to some value like 1,000,000.

A numeric column still has a limitation. But the question is: do we really need to store estimated times greater than 1,000,000?
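A SQL `DECIMAL(precision, scale)` column holds at most `precision` digits, and `scale` of those digits come after the decimal point. Its largest value is therefore (10^precision - 1) / 10^scale. The small sketch below uses a hypothetical helper, `decimal_column_max`, and an illustrative `DECIMAL(10, 2)` definition that is not taken from Redmine's schema.

```ruby
# Hypothetical helper: largest value a DECIMAL(precision, scale) column can
# hold, returned as an exact Rational.
def decimal_column_max(precision, scale)
  Rational(10**precision - 1, 10**scale)
end

max = decimal_column_max(10, 2)
puts max.to_f # 99999999.99

# The value from the report still does not fit, so a decimal column needs a
# range check as well.
puts 999999999999999999999999999999 > max # true
```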

Jean-Philippe Lang wrote:

[...] But the question is: do we really need to store estimated times greater than 1,000,000?

I don't think so. IMO a sane range validation is the most pragmatic way to work around these (extreme edge-case) issues.

But even with a limit of 1,000,000, floating-point errors could still occur in time log reports. A sensible limit would be OK, but the datatype still isn't the best choice.
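The concern about reports is that rounding errors accumulate when many Float values are added together. The minimal sketch below uses made-up time entries of 0.1 hours each, which are illustrative data and not from a real report. It uses `inject(:+)` on purpose, because Ruby's `Array#sum` applies compensated summation to Floats and would hide the error in this small case.

```ruby
require 'bigdecimal'

# Ten hypothetical time entries of 0.1 hours (6 minutes) each.
float_entries = Array.new(10, 0.1)
decimal_entries = Array.new(10, BigDecimal('0.1'))

# Naive left-to-right addition, as a simple report loop might do.
float_total = float_entries.inject(:+)
decimal_total = decimal_entries.inject(:+)

puts float_total             # 0.9999999999999999
puts decimal_total.to_s('F') # 1.0
```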
